Using our predictive model, we placed 268 bets over 30 weeks on Bet365. Other than a few exceptions early on, we bet exclusively on Top 20s. A detailed summary of our results can be found here.

First, a bit on the model’s performance, and then a few thoughts.

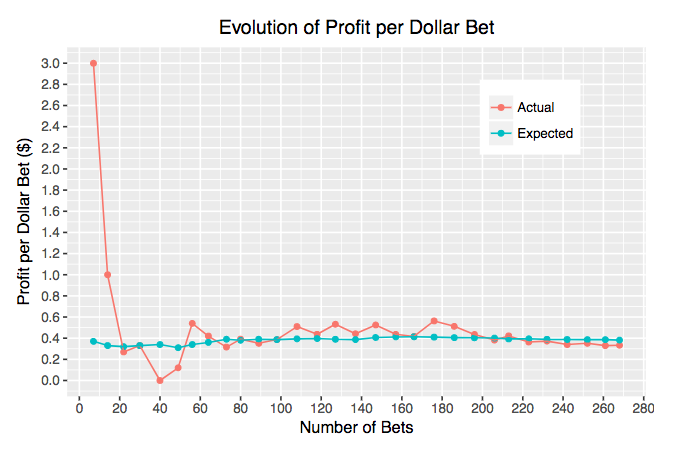

Here is a graph from the summary document that reflects quite favorably on the model:

Simply put, we see that our realized profit converges to the expected profit, as determined by the model, as the number of bets gets large. I think this is some form of a law of large numbers (it’s not the simple LLN because the bets are not i.i.d.). This is suggestive evidence that the model is doing something right.

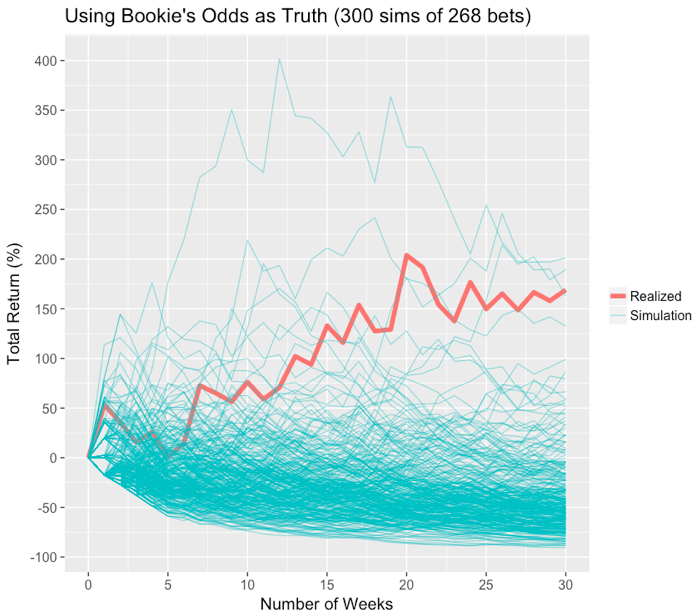

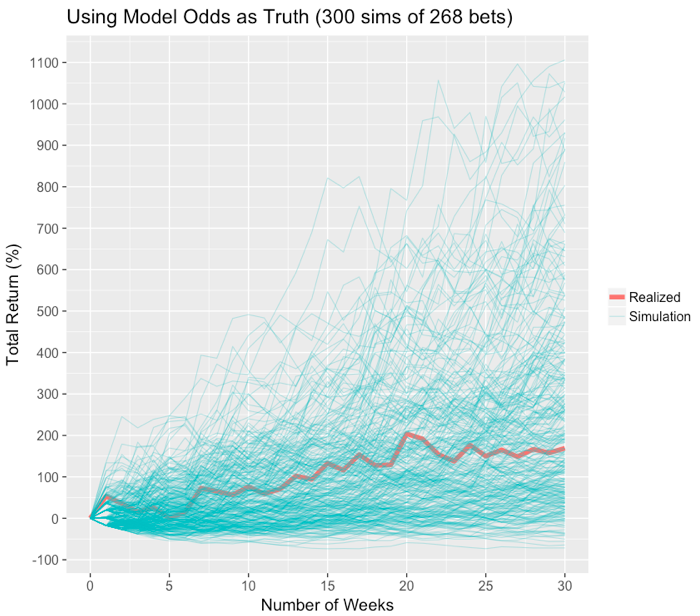

Next, I want to show two graphs that I put up previously when discussing the model’s performance through 17 weeks:

The first graph simulates a bunch of 30-week profit paths assuming that the bookie’s odds reflect the true state of the world. You can see the mean is around -40% or so, which is due to the fact that the bookie takes a cut. Our actual profit path is also shown (in red) and we see that we beat nearly all the simulated profit paths. This tells us that it is very unlikely our profit path would have arisen purely due to chance.

The second graph again shows some simulations, this time assuming that the model’s odds reflect the true state of the world. We see that the realized profit path is pretty average, conditional on the model being true.
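For readers who want to try this kind of exercise themselves, here is a minimal sketch of how such profit paths could be simulated. It is not the code behind the graphs above. The function names, the flat 1-unit stakes, the odds of 5.0, and the 20% margin in the usage note are all made up for illustration, and the sketch assumes that the bookie's margin is spread proportionally across outcomes and that bets are independent of one another (which is not quite true for several Top 20 bets in the same tournament).

```python
import random

def fair_prob_from_odds(decimal_odds, margin):
    """Win probability implied by bookmaker odds once the cut is removed.

    Assumption: the margin is spread proportionally over all outcomes.
    """
    return (1.0 / decimal_odds) / (1.0 + margin)

def expected_profit(bets):
    """Expected total profit; each bet is (stake, decimal_odds, win_prob)."""
    return sum(stake * (p * odds - 1.0) for stake, odds, p in bets)

def simulate_profit_path(bets, rng):
    """Cumulative profit after each bet for one simulated season.

    win_prob is whatever we assume to be the truth: the bookie's implied
    probability for the first graph, the model's for the second.
    """
    profit = 0.0
    path = []
    for stake, decimal_odds, win_prob in bets:
        if rng.random() < win_prob:
            profit += stake * (decimal_odds - 1.0)  # winnings net of stake
        else:
            profit -= stake                         # stake is lost
        path.append(profit)
    return path

def share_of_paths_beaten(realized_final, simulated_finals):
    """Fraction of simulated seasons that ended below the realized profit."""
    return sum(f < realized_final for f in simulated_finals) / len(simulated_finals)
```

To mimic the first graph, build the list of bets with `win_prob = fair_prob_from_odds(odds, margin)`, call `simulate_profit_path` a few thousand times with a seeded `random.Random`, and pass the final profits to `share_of_paths_beaten` along with the realized profit. For the second graph, swap in the model's probabilities as `win_prob`.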

A final angle that you can look at to gauge our model’s performance is provided here. This basically answers questions of the following nature: the model said this set of players would make the cut “x” % of the time, so, how often did they actually make the cut?
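A calibration check of this kind can be sketched in a few lines. The version below is only an illustration, not the code behind the linked results: the function name and the choice of ten equal-width bins are assumptions. It groups predicted probabilities into bins and compares the average prediction in each bin with how often the event actually happened.

```python
from collections import defaultdict

def calibration_table(predictions, outcomes, n_bins=10):
    """Compare predicted probabilities with observed frequencies.

    predictions: probabilities in [0, 1]; outcomes: 1 if the event
    happened, 0 otherwise. Returns one row per non-empty bin:
    (bin_low, bin_high, count, mean_prediction, observed_frequency).
    """
    bins = defaultdict(list)
    for p, y in zip(predictions, outcomes):
        idx = min(int(p * n_bins), n_bins - 1)  # p == 1.0 goes in the last bin
        bins[idx].append((p, y))
    rows = []
    for idx in sorted(bins):
        pairs = bins[idx]
        mean_pred = sum(p for p, _ in pairs) / len(pairs)
        freq = sum(y for _, y in pairs) / len(pairs)
        rows.append((idx / n_bins, (idx + 1) / n_bins, len(pairs), mean_pred, freq))
    return rows
```

For a well-calibrated model, the last two columns of each row should be close to each other once the bin contains a reasonable number of predictions.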

Overall, I think all of these methods for evaluation show that the model was pretty successful.

So, what did we learn? We had never bet before, so perhaps some of these *insights* are already well-known.

First of all, developing this model has given us a greater appreciation of how *random* golf really is. Even though our model seems to be “well-calibrated”, in the sense that if it says an event will happen x % of the time, it usually does happen about x % of the time, it does not have much predictive power. In statistical parlance, we are only able to explain about 6-8% of the daily variation in scores on the PGA TOUR with the model; the rest of the variation is unaccounted for.
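To see how a model can be well-calibrated and still explain very little, here is a toy simulation. It is a sketch under made-up assumptions, not our actual model: each round's score is a player's expected score plus independent noise, and the values of `skill_sd` and `noise_sd` in the usage note are illustrative rather than estimated. The "prediction" is the true expected score, so no model could do better, yet most of the variation is still left unexplained.

```python
import random

def r_squared(predicted, actual):
    """Share of the variance in `actual` explained by `predicted`."""
    mean_actual = sum(actual) / len(actual)
    ss_total = sum((y - mean_actual) ** 2 for y in actual)
    ss_resid = sum((y - f) ** 2 for f, y in zip(predicted, actual))
    return 1.0 - ss_resid / ss_total

def simulate_rounds(n_rounds, skill_sd, noise_sd, rng):
    """Simulate rounds where score = expected score + pure luck.

    The prediction for each round is the true expected score.
    """
    predicted, actual = [], []
    for _ in range(n_rounds):
        skill = rng.gauss(0.0, skill_sd)            # spread in expected scores
        predicted.append(skill)
        actual.append(skill + rng.gauss(0.0, noise_sd))  # add day-to-day noise
    return predicted, actual
```

With, say, `skill_sd=0.75` and `noise_sd=2.75` strokes, the theoretical share of variance explained is `0.75**2 / (0.75**2 + 2.75**2)`, so even a perfect forecast of every player's expected score leaves the bulk of the round-to-round variation unaccounted for.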

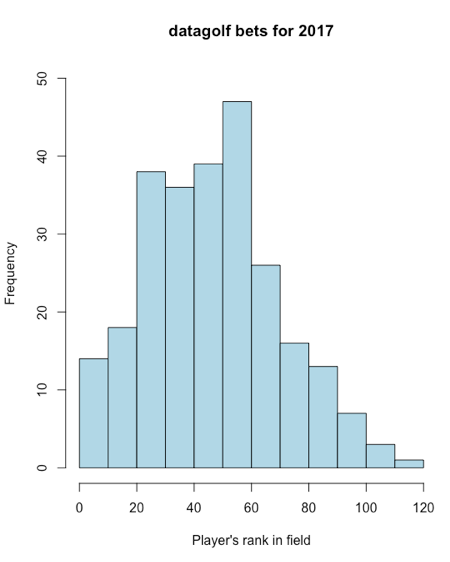

Second, and this is definitely related to the point above, our model generally likes the lower-ranked golfers more, and the higher-ranked golfers less, than the betting market does. For example, of our 268 bets, only 15 were made on golfers ranked in the top 10 of the field that week (we determine rank based on our model). More generally, the average rank in a given week of the players we bet on was 48th; here is a full histogram:

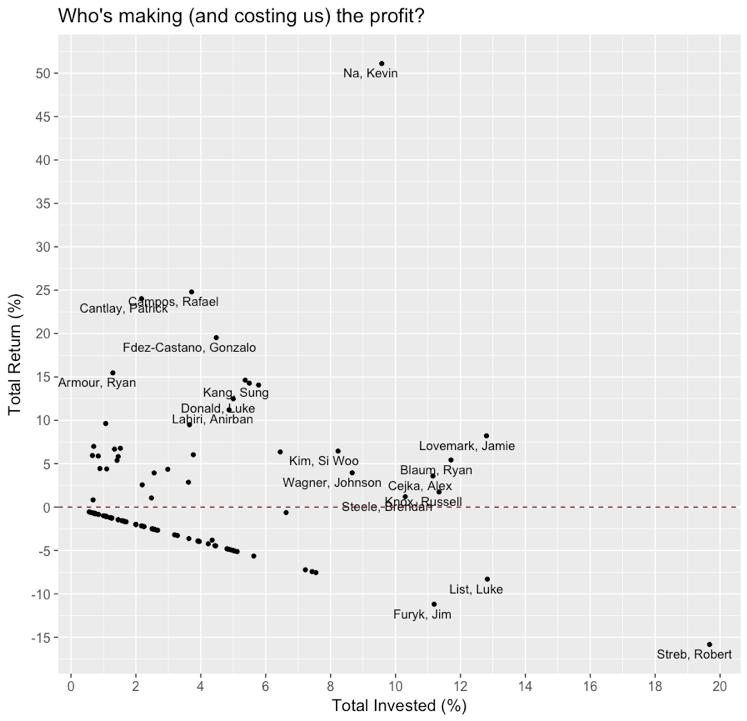

So why did our model view the low-ranked players more favorably than the betting sites? Well, it could just be that the majority of casual bettors like to bet on favorites (because they want to pick “winners”, as opposed to good value bets). Betting sites therefore have an incentive to adjust their odds to reflect this. However, it could also be that our model acknowledges, to a greater degree than the oddsmakers do, that a large part of golf scores cannot easily be predicted. As a consequence, our model doesn’t predict that large a gap between the top-tier players and the bottom-tier players in any given week. For reference, here is a graph outlining some of the players we bet on this year:

Third, our model valued long-term (2-year) performance much more than the market did. As a consequence, we would find ourselves betting on the same player many weeks in a row if that player fell into a rut. For example, Robert Streb was rated pretty decently in our model at the start of 2017 due to his good performance in 2015/2016. But, as 2017 progressed, Streb failed to put up any good performances. The market adjusted pretty rapidly by downgrading Streb’s odds after just a few weeks of bad play, while the model’s predictions for Streb didn’t move much because it values longer-term performance a lot. As a consequence, we bet (and lost!) on Streb for many consecutive weeks, until he finally came 2nd at the Greenbrier, at which point the market rebounded rapidly on Streb’s stock, so much so that we didn’t bet on him much for the rest of the year. It’s important to note that we don’t arbitrarily *choose* to weight 2-year scoring average heavily. The weights are determined by the historical data used to fit the model; whatever predicts best gets weighted the most. Long-term scoring averages are by far the most predictive of future performances, and the model weights reflect this. In fact, for every 1 stroke better (per round) a player performed in his most recent event, the model only adjusts his predicted score for the next week by 0.03-0.04 strokes!

Fourth, our model did not use any player-course specific characteristics. This stands in opposition to the general betting market, which seems to fluctuate wildly due to supposed course fit. A great example of this was Rory McIlroy at the PGA Championship at Quail Hollow this year. Rory went from being on nobody’s list of favorites in the tournaments during the preceding weeks, to the top of nearly everyone’s at the PGA. In contrast, we made no adjustment, and as a consequence went from being more bullish on Rory than the markets at the Open, to less bullish at the PGA. It’s not necessarily that we don’t think that these effects exist (e.g. Luke Donald does seem to play well at Harbour Town); it’s simply that we don’t think there is enough data to precisely identify these effects. For example, even if a player plays the same course for 8 consecutive years, this is still only 32 rounds, at most, which is not a lot of data to learn much of value from. And, in most cases, you have far fewer than 32 rounds from which to infer a “course-player fit”. When a list of scoring averages, or some other statistic, is presented based on only 10, or even 20, rounds, it should be viewed skeptically. With a small sample size, it’s likely that these numbers are mostly just noise. Regarding the Luke Donald/Harbour Town fit: even if there are no such things as course-player effects, we would still expect some patterns to emerge in the data that look like course-player effects just due to chance! This becomes more likely as the sample of players and courses grows. Essentially this is a problem of testing many different hypotheses for the existence of a course-player effect: eventually you will find one, even if, in truth, there are none.
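The multiple-testing point is easy to demonstrate with a small simulation. The sketch below is purely illustrative: the function name is made up, every player is assumed to have identical ability, and round scores are assumed to be independent normal noise around zero, so by construction there is no course-player effect at all. It then reports the best-looking player-course average that chance alone produces.

```python
import random

def largest_spurious_effect(n_players, n_courses, rounds_per_pair, noise_sd, rng):
    """Best (lowest) player-course scoring average when NO true effect exists.

    Scores are pure noise with mean 0, so any average far below 0 is a
    "course fit" manufactured entirely by chance.
    """
    best = 0.0
    for _ in range(n_players * n_courses):
        total = sum(rng.gauss(0.0, noise_sd) for _ in range(rounds_per_pair))
        best = min(best, total / rounds_per_pair)
    return best
```

Calling this with something like 100 players, 30 courses, 32 rounds per pairing and a hypothetical `noise_sd` of 2.75 strokes shows how large an apparent advantage the luckiest pairing can have, even over a full 32 rounds and with nothing real underneath it.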

Fifth, and finally, I think it is incredibly important to have a fully specified model of golf scores because it allows you to simulate the scores of the entire field. Unless you have a ton of experience betting, it would seem, to me, to be very difficult to know how a 1 stroke/round advantage over the field translates into differences in, say, the probability of finishing in the top 20. By simulating the entire field’s scores, you are provided with a simple way of aggregating your predictions about scoring averages into probabilities for certain types of finishes.
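As a rough illustration of that aggregation step, here is a heavily simplified sketch, not our model. It assumes every other player in the field is identical, round scores are independent normal draws, there is no cut, and ties are ignored; the function name and all parameter values are hypothetical.

```python
import random

def top_n_probability(advantage, field_size, n_rounds, noise_sd, top_n, n_sims, rng):
    """Estimate P(top_n finish) for a player whose expected score is
    `advantage` strokes per round better than everyone else in the field.

    Scores are measured relative to the field average; lower is better.
    """
    hits = 0
    for _ in range(n_sims):
        player_total = sum(rng.gauss(-advantage, noise_sd) for _ in range(n_rounds))
        beaten_by = 0
        for _ in range(field_size - 1):
            other_total = sum(rng.gauss(0.0, noise_sd) for _ in range(n_rounds))
            if other_total < player_total:
                beaten_by += 1
        if beaten_by < top_n:  # fewer than top_n players finished ahead
            hits += 1
    return hits / n_sims
```

With `advantage=0.0` the estimate should hover around `top_n / field_size`, since the player is then no different from anyone else. Rerunning with `advantage=1.0` shows how much a 1 stroke/round edge moves the top 20 probability under these assumptions, which is exactly the kind of translation that is hard to do by intuition.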