[Last updated: November 6th, 2017]

This is going to be a running blog post where, from time to time, we review the past week’s tournament through the lens of our live predictive model of scores. If you are unfamiliar with our predictive model, a good starting point is reading about how we predict tournaments before the event starts. To go from pre-tournament predictions to live predictions throughout the event, we make only a few adjustments, which we’ll touch on at various points in this blog. Roughly, what we do account for once the event starts is how hard different holes are playing, and the persistence in performance from one round to the next (i.e. playing well in round 1 does affect how we predict rounds 2-4); what we do not account for is within-round persistence in performance (i.e. playing well on hole 1 does not affect how we predict holes 2-18) and hole*player-specific effects (i.e. each hole is assumed to play harder or easier than average by the same amount for all players).

With this blog post we hope to achieve a few things: first, to provide readers with a unique way of looking at how a golf tournament played out; second, to allow readers to better understand how our model works and how to think about probability in golf; and third, to provide an occasionally lively and humorous read. Enjoy!

Nov 6th, 2017: Alex Cejka comes back from the dead at the Shriners

For almost the entire final round on Sunday at the Shriners, the model was giving Alex Cejka less than a 0.5% chance of winning (and at various points a 0.0% chance!). In the end, he fired an 8-under 63 to get himself into a 3-man playoff, which he ultimately lost. How worried should we be about our live model if it gives a player a 0% chance of winning at some point in the event, and that player then ends up in a playoff?!

Further, recall that last week our predictive model gave Justin Rose basically no chance of winning (<0.5%) for much of the final round, and he went on to win! So, what’s up? Is something wrong with our model?

First, consider this question: what is the probability that the eventual winner of a tournament had a live win probability lower than 1% at some point throughout the event?

As it turns out, this is actually pretty likely to happen. At the Shriners, the start-of-tournament win probabilities showed that there was a 45% chance of the winner being a player who had a pre-tournament win probability less than 1% (to get this, I just add up the win probabilities for all players with win prob. < 1%). Further, this 45% number will be a lower bound. As the tournament progresses, if any of the players who started with an above 1% win probability has their live win probability dip below 1%, this will increase that 45% number slightly. You could definitely figure out the exact answer to the question posited at the start of the paragraph, but for now let’s just leave it at greater than 45% (for the specific example of this past week’s event).
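The "add up the longshots" calculation can be sketched in a few lines. The field below is made up for illustration (it is not the actual Shriners field); the numbers are simply chosen so that the longshots total 45%, mirroring the figure quoted above. The function name `longshot_winner_prob` is my own.

```python
def longshot_winner_prob(win_probs, threshold=0.01):
    """Probability that the winner comes from the players whose
    pre-tournament win probability is below `threshold`."""
    return sum(p for p in win_probs if p < threshold)

# Hypothetical field: 12 "favourites" whose probabilities sum to 55%...
favourites = [0.08, 0.07, 0.06, 0.05, 0.05, 0.04,
              0.04, 0.04, 0.03, 0.03, 0.03, 0.03]
# ...and 120 longshots sharing the remaining 45% equally (0.375% each).
longshots = [0.45 / 120] * 120

field = favourites + longshots
lower_bound = longshot_winner_prob(field)
print(f"Chance the winner started below 1%: {lower_bound:.1%}")
```

Because players can only be added to the "dipped below 1% at some point" group as the week goes on, this sum is a floor on the answer, not the answer itself.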

Another way to understand when we should worry about the model getting something (seemingly drastically) wrong is to recall how we evaluate our predictive model. In a nutshell, to evaluate the probabilistic forecasts, we look at, for example, all the times the model said event “X” (e.g. winning) would happen with a probability of 1%; then, we check the final outcomes for these predictions and see how many times event “X” (winning) actually happened. If it turns out that roughly 1% of the players who were predicted to win with probability 1% did in fact win, then we say the model is doing its job correctly. So the reason we should not (necessarily) be worried when Justin Rose, or Alex Cejka, goes on to win (or almost win) despite having a very low win probability at some point during the event is that there were many other players who had these very low win probabilities, too. While Justin Rose did happen to win last week after having just a 0.2% (or so) win probability (according to the model) at some point during the round, there were many other players who had a 0.2%ish win probability at some point who did not win. If the model gives 2000 players (over the course of several weeks) a 0.2% chance of winning at some point, we expect 0.002*2000 = 4 of these players to go on to win. It just so happens that Rose (and almost Cejka) was one of those 4. (All of this is not to say that the model isn’t getting things wrong – it might be, but we can’t really say whether this is true just using the last couple weeks’ data.)
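Here is a minimal sketch of that kind of calibration check. It is not our actual evaluation code: the forecasts and outcomes are simulated, the bin edges are arbitrary, and `calibration_table` is a name made up for this example. The idea is just to group forecasts into probability bins and compare the average forecast in each bin to the observed win rate.

```python
import random

def calibration_table(preds, outcomes, edges):
    """For each bin [lo, hi), return (lo, hi, count, mean forecast, observed win rate)."""
    rows = []
    for lo, hi in zip(edges[:-1], edges[1:]):
        idx = [i for i, p in enumerate(preds) if lo <= p < hi]
        if not idx:
            continue  # skip bins with no forecasts
        mean_pred = sum(preds[i] for i in idx) / len(idx)
        win_rate = sum(outcomes[i] for i in idx) / len(idx)
        rows.append((lo, hi, len(idx), mean_pred, win_rate))
    return rows

# Simulated data: 2000 forecasts of 0.2% and 2000 forecasts of 5%,
# with outcomes drawn so that the forecasts are correct by construction.
rng = random.Random(0)
preds = [0.002] * 2000 + [0.05] * 2000
outcomes = [1 if rng.random() < p else 0 for p in preds]

rows = calibration_table(preds, outcomes, [0.0, 0.01, 0.1, 1.0])
for lo, hi, n, mean_pred, win_rate in rows:
    print(f"[{lo:.2f}, {hi:.2f}): n={n}, forecast={mean_pred:.3%}, observed={win_rate:.3%}")

# Expected number of winners among the 2000 longshots, as in the text.
expected_longshot_wins = 0.002 * 2000
print("Expected longshot winners:", expected_longshot_wins)
```

With only 2000 forecasts in the 0.2% bin, the observed count will bounce around the expected 4 from sample to sample, which is exactly why a couple of weeks of data cannot settle whether the model is miscalibrated.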

It’s a bit like thinking about who ends up winning the lottery: before the winner’s name (e.g. William Nilly) is pulled, William Nilly has just a 1 in 10 million shot at winning. So when he wins, we say, “wow! That event (Mr. Nilly winning the lottery) had only a 1 in 10 million chance of happening, and it did!!” But of course, somebody has to win the lottery. Indeed, we can safely predict with 100% certainty that the winner will be someone who had just a 1 in 10 million shot at winning it (assuming everybody buys just a single ticket) before the winning ticket was pulled; we just don’t know who it will be. To relate this to winning a golf tournament, it is easy to be shocked by a longshot winner when it happens, but keep in mind that somebody had to win the tournament, and if half the field is composed of so-called “longshots”, it’s really not that unlikely that one of them goes on to win.

Okay, moving on. Now I want to briefly analyze one of the scenarios yesterday where Cejka was not getting any love from the model. This should help readers understand what the model does well and what its limitations are.

At 2pm PST, Cejka was finished in the house at -9, and the model was giving him a 0% chance of winning (this is based off 5000 simulations, so it’s likely not truly 0% – if we simulated 10000 times and didn’t round the number, it would probably not be exactly 0).
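To see why a 0% readout from 5000 simulations does not imply a true probability of zero, consider a quick sketch. If a player's true win probability is p and each simulation is independent, the chance that he wins none of n simulations is (1 - p)^n. The "true" probabilities below are hypothetical values picked for illustration, not model output.

```python
def prob_zero_wins(true_p, n_sims):
    """Chance that a player with true win probability `true_p`
    wins in none of `n_sims` independent simulations."""
    return (1.0 - true_p) ** n_sims

# Hypothetical true win probabilities: 0.005%, 0.01%, and 0.1%.
for true_p in (0.00005, 0.0001, 0.001):
    print(f"true p = {true_p:.3%}: "
          f"P(0 wins in 5000 sims) = {prob_zero_wins(true_p, 5000):.1%}, "
          f"P(0 wins in 10000 sims) = {prob_zero_wins(true_p, 10000):.1%}")
```

So a displayed 0% is quite compatible with a true probability of a few thousandths of a percent, but not with one as large as 0.1%, which would almost always produce at least one simulated win.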

The notables at the top of the leaderboard at this time were Spaun at -10 (thru 11, 41% win prob.), Cantlay at -9 (thru 12, 26% win prob.), Hadley at -9 (thru 12, 19% win prob), Hossler at -8 (thru 11, 6% win prob.), Bryson at -8 (thru 14, 2% win prob.) and then Kim at -7 (thru 11, 2% win prob.). And, as I mentioned, Cejka was in the house at -9.

The main reason the model deemed it to be so unlikely that Cejka would win, despite being just 1 shot off the lead, was that the finishing holes were playing very easy: holes 13-16 were playing roughly 2 shots under par, and 17-18 were playing around even par at the time. Therefore, the effective leaderboard really had Spaun, Cantlay, Hadley, and Hossler 2 shots better than what they were at, and Bryson 1 shot better. The question (or, one of the questions) to be answered at this point is: how likely is it for Spaun to shoot 1 over par or worse on a 7-hole stretch where the average player is shooting 2 under? Spaun is a bit better than the average player in this field, and he will be 1 over or worse on this stretch roughly 5% of the time. We can then make similar calculations for Cantlay (he will be E or worse 11% of the time, roughly), Hadley (he will be E or worse roughly 13% of the time), Hossler (he will be 1 under or worse 27% of the time), and finally Bryson and Kim (they will be 1 under and 2 under or worse, respectively, about 50% of the time).

Well… if I haven’t lost you in that incredibly long sentence, we can now understand why the model wasn’t optimistic about Cejka’s chances. To get into a playoff, or win outright, all of the events I described above had to happen. Assuming the events are independent (i.e. Spaun playing terrible down the stretch will not be related to Hadley playing terrible down the stretch), the probability of all the events happening is:

5% * 11% * 13% * 27% * 50% * 50% ≈ 0.005%!

This is only a rough calculation, as there are other players that should be involved – but this should be an upper bound for Cejka’s win probability at 2pm PST!
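For anyone who wants to check the arithmetic, here is the same back-of-the-envelope product in code. The individual probabilities are the rough ones quoted above; the dictionary layout and variable names are just for this example.

```python
from math import prod

# Rough probability that each contender finishes at -9 or worse,
# as quoted in the text (assumed independent of one another).
collapse_probs = {
    "Spaun": 0.05,
    "Cantlay": 0.11,
    "Hadley": 0.13,
    "Hossler": 0.27,
    "Bryson": 0.50,
    "Kim": 0.50,
}

# Under independence, the chance that all of these happen is the product.
joint = prod(collapse_probs.values())
print(f"Chance Cejka at least gets into a playoff: {joint:.4%}")
print(f"That is roughly 1 in {round(1 / joint):,}")
```

At about 1 in 20,000, it is no surprise that 5000 simulations would usually produce zero Cejka wins.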

Now, there could be some problems with how I’ve just calculated this. First, the model is always using the performance of players earlier in the day to determine how difficult holes are playing for the players still on the course. Therefore, if the wind picks up (as it did), the model is not accounting for this. So, while in the model the last 7 holes were playing 2 under par for an average player, in reality, because of the changing conditions, they were likely playing a bit harder. That is probably the most important point. Second, it’s also possible that my assumption that how Spaun plays the final few holes is independent of how Hadley plays them is false. The reason? Golf is a strategic game: at the end of a tournament especially, I will play differently depending on whether I am leading or chasing. Therefore, players’ scores may be correlated with each other, and this would make the math I did above result in a lower probability than it should have. Third, there is no accounting for *choking* in the model, which looked like it played a role in how the last few holes played out at the Shriners.
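To get a feel for how much the independence assumption matters, here is a rough Monte Carlo sketch. It is not how our model works: it assumes each player's finish is driven by a standard normal "luck" term made up of a shared shock (think wind) and an individual part, with the correlation `rho` chosen arbitrarily. The function name `joint_collapse_prob` and the value rho = 0.3 are made up for illustration. Only Spaun (5%) and Hadley (13%) are used, to keep the simulated event common enough to estimate.

```python
import random
from statistics import NormalDist

def joint_collapse_prob(marginals, rho, n_sims=100_000, seed=1):
    """Estimate the chance that every player 'collapses', where player i
    collapses with marginal probability marginals[i] and the underlying
    normal shocks share a pairwise correlation of rho (0 <= rho <= 1)."""
    rng = random.Random(seed)
    # A player collapses when his N(0, 1) shock exceeds this threshold.
    thresholds = [NormalDist().inv_cdf(1.0 - p) for p in marginals]
    a, b = rho ** 0.5, (1.0 - rho) ** 0.5
    hits = 0
    for _ in range(n_sims):
        shared = rng.gauss(0.0, 1.0)  # common shock, e.g. the wind picking up
        # a*shared + b*own is still N(0, 1), so the marginals are unchanged.
        if all(a * shared + b * rng.gauss(0.0, 1.0) > t for t in thresholds):
            hits += 1
    return hits / n_sims

independent = joint_collapse_prob([0.05, 0.13], rho=0.0)
correlated = joint_collapse_prob([0.05, 0.13], rho=0.3)
print(f"Both collapse, independent:  {independent:.2%}")
print(f"Both collapse, rho = 0.3:    {correlated:.2%}")
```

Even a modest positive correlation makes the joint collapse noticeably more likely than the simple product suggests, and the effect compounds when six players are involved instead of two.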

To conclude, in this case I think using hole-specific scoring averages from earlier in the day likely caused some issues for the model’s predictions later on (because the wind picked up and the holes played much harder than earlier). In reality, Alex Cejka’s win probability was likely closer to 1% than to 0% when the leaders had 6-8 holes to go. However, for reasons pointed out earlier, we should never be too surprised when the winner of a golf tournament has a very low live win probability at some point during the event.

October 29th, 2017: WGC-HSBC Championship

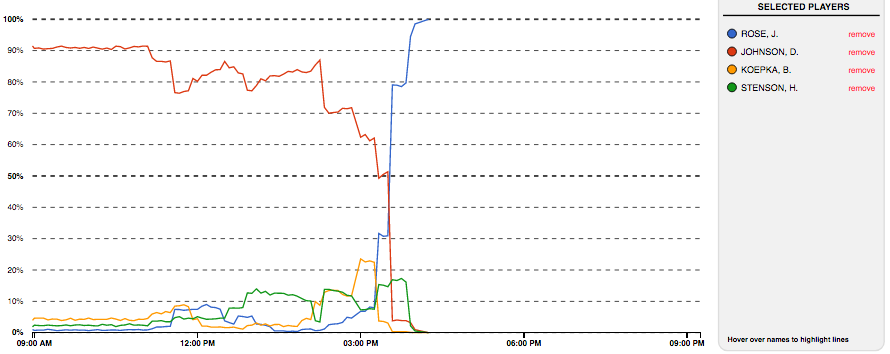

As one of 15 viewers who took in the entire final round telecast in China last night, I was treated to a wild back 9 that ultimately saw Justin Rose overcome an 8-stroke deficit at the start of the day to win by 2. Here is the tale of the tape in the final round, according to our model:

11:00am (DJ: -17, Koepka: -11, Stenson: -10, Rose: -9)

To start the day, our model was giving Rose (starting 8 back) just a 0.7% chance of winning, while Dustin Johnson, who led by 6 over Brooks Koepka (4.6% chance to start the day), had a 91% chance of closing it out. The other relevant player was Stenson, who began 7 back with a 2.4% chance of winning.

To start thinking about golf probabilistically, consider this: suppose we have an 18-hole match between two equal players, with typical “standard deviations” (i.e. a measure of how much scores vary from round to round), and player 1 has a 6-shot advantage over player 2 at the start of the day. Simulations show that player 1 will win about 92% of the time from this position. Here, DJ is actually a slightly better player than any of his 3 pursuers, so why is he only getting a 91% chance of winning? The reason, of course, is that he has to beat not one player (as in my example), but three.
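A rough way to reproduce this reasoning is sketched below. It assumes each player's 18-hole score is normally distributed around the same mean with a round-to-round standard deviation of 3 strokes; that value is my assumption for illustration, not a number taken from the model, and ties are ignored. Both function names are made up for this example.

```python
import random
from statistics import NormalDist

def lead_holds_prob(lead, round_sd=3.0):
    """Chance a player with a `lead`-shot head start beats one equal opponent.
    The difference of two independent rounds has sd sqrt(2) * round_sd."""
    diff_sd = (2 * round_sd ** 2) ** 0.5
    return NormalDist(0.0, diff_sd).cdf(lead)

def leader_wins_prob(deficits, round_sd=3.0, n_sims=100_000, seed=1):
    """Monte Carlo chance the leader finishes ahead of every pursuer,
    where deficits[i] is how many shots pursuer i starts behind."""
    rng = random.Random(seed)
    wins = 0
    for _ in range(n_sims):
        leader = rng.gauss(0.0, round_sd)  # final-round score vs. expectation
        # Pursuer's total relative to the leader = own round + starting deficit.
        if all(leader < rng.gauss(0.0, round_sd) + d for d in deficits):
            wins += 1
    return wins / n_sims

one_pursuer = lead_holds_prob(6)
three_pursuers = leader_wins_prob([6, 7, 8])
print(f"6-shot lead over one equal player:      {one_pursuer:.1%}")
print(f"Leading equal players by 6, 7, and 8:   {three_pursuers:.1%}")
```

Under these assumptions the one-on-one number lands near the 92% quoted above, and adding two more pursuers pulls it down, even though each of them is a longshot individually. In the real event DJ was rated slightly better than his pursuers, which is part of why he held on to 91%.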

11:35am (DJ (thru 2): -15, Koepka (thru 2): -11, Rose (thru 3): -11, Stenson (thru 2): -10)

Rose quickly made up 4 shots in about half an hour. DJ’s win probability was still 76%, and Rose’s was now 7%. Despite a rough start, DJ was still 4 up, and with fewer than 18 holes left that is a lot to make up.

1:30pm (DJ (thru 8): -15, Stenson (thru 8): -12, Koepka (thru 8): -10, Rose (thru 9): -9)

Unbelievably, thru 9 holes Rose now has just a 0.4% chance of winning. He’s 6 back and things are not looking good. DJ has just a 3-shot advantage over Stenson, but still has an 82% chance of closing this out.

3:20pm (DJ (thru 15): -13, Rose (thru 16): -13, Stenson (thru 15): -12, Koepka (thru 15): -11)

Now, things are getting tight. DJ’s win prob is 49%, while Rose’s is 32%. Why the big difference, given that they are tied? Hole 16 is a short par 4, playing 0.2 strokes under par; DJ still had this to play, while Rose did not. This illustrates the importance of accounting for the difficulty level of the remaining holes a player has. It also illustrates how small the margins can be in golf; a hole playing 0.2 strokes easier matters, especially with just 3 holes remaining.

3:35pm (Rose (thru 17): -14, Stenson (thru 16): -13, DJ (thru 16): -12, Koepka (thru 16): -11)

Rose now has a 1-stroke lead, and a 79% chance of closing this out; Stenson sits at 17% and DJ now has just a 4% chance of coming back to win.

4:00pm (Rose (F): -14, Stenson (thru 17): -12, DJ (thru 17): -12, Koepka (thru 17): -11)

Rose is in the house with a 2-shot lead over Stenson and DJ, and a 3-shot lead over Koepka. He’s got a 98.5% chance of closing it out; DJ has a 1% chance; Stenson has 0.5%, and Koepka 0.0%. The 18th hole was playing at about even par, so the model was giving DJ and Stenson only a 1% and 0.5% chance of making eagle respectively.

In the end, neither player could make an eagle, and Rose pulled off a shocking win.